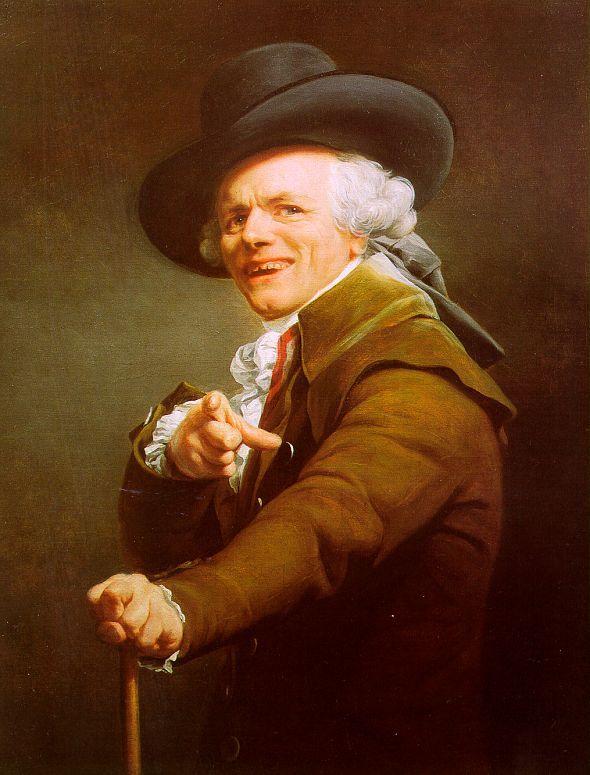

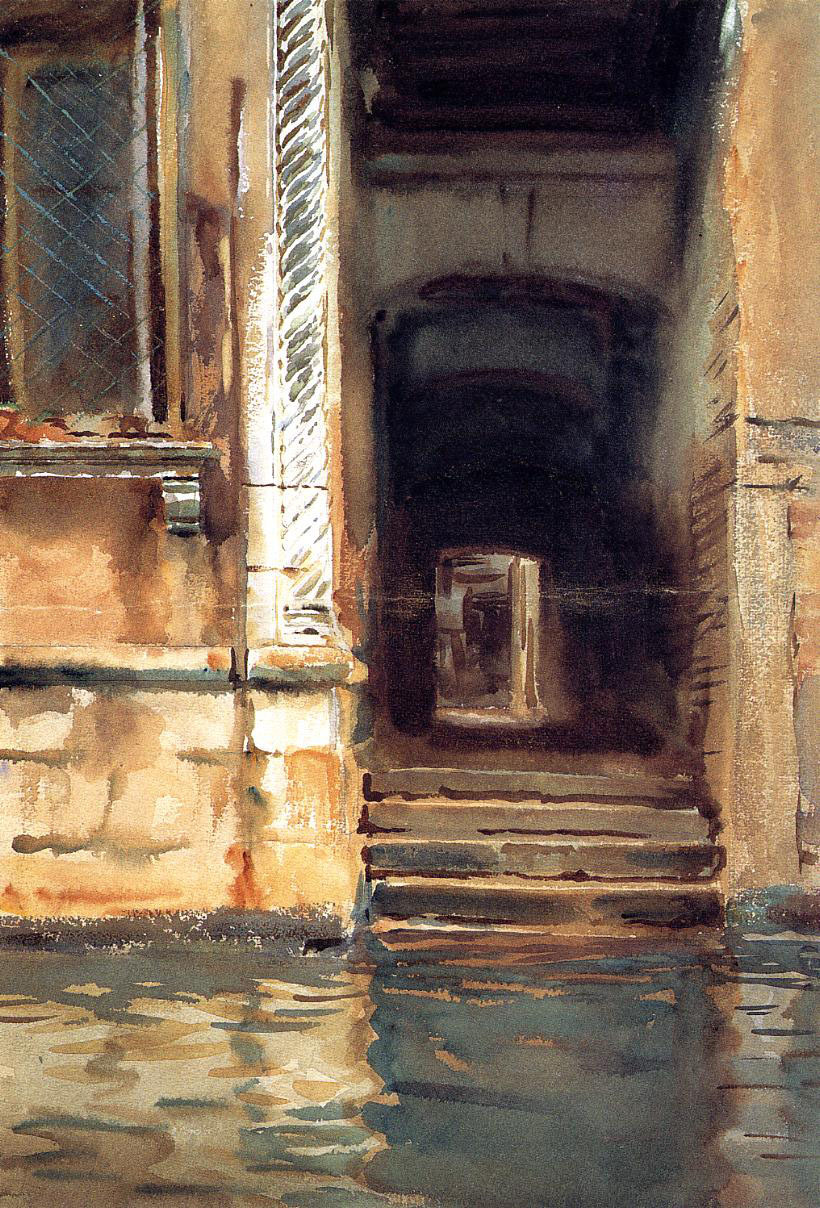

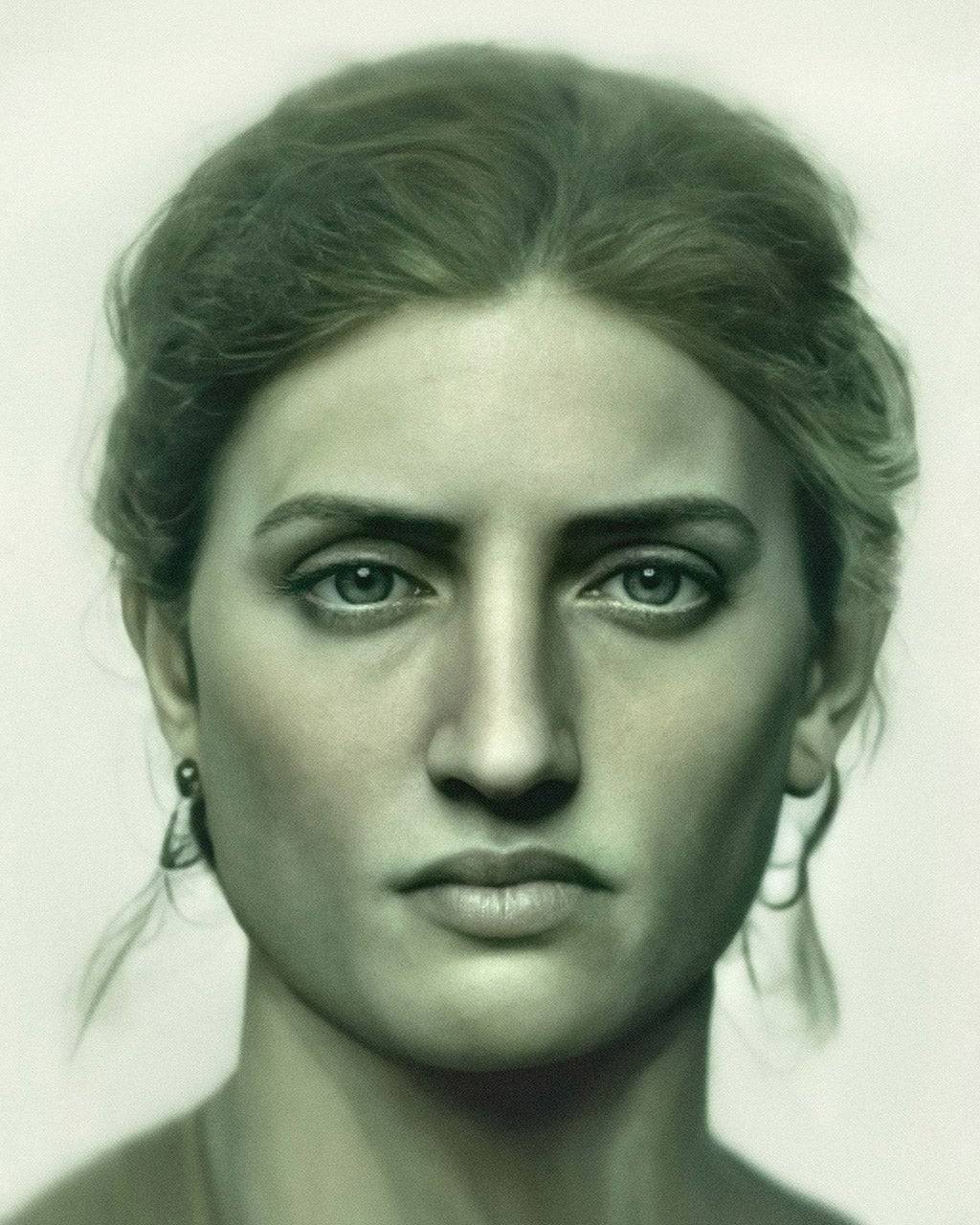

Ducreux, Self-Portrait in the Guise of a Mockingbird, 1791

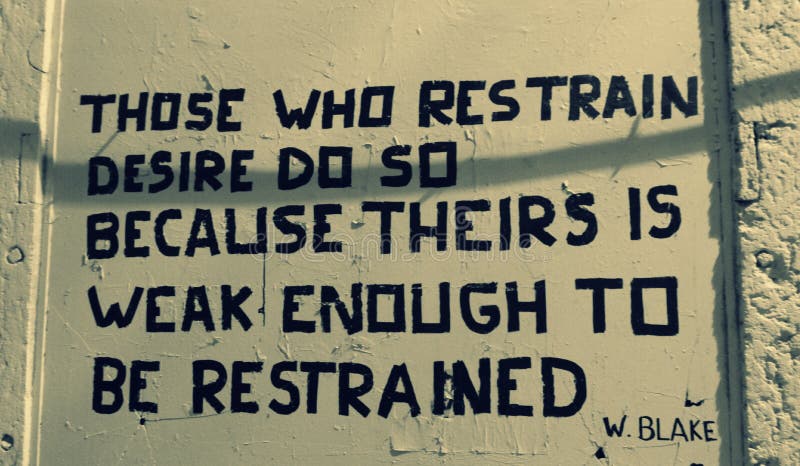

You can’t figure out why an AI is generating a hallucination by asking it. It is not conscious of its own processes. So if you ask it to explain itself, the AI will appear to give you the right answer, but it will have nothing to do with the process that generated the original result. The system has no way of explaining its decisions, or even knowing what those decisions were. Instead, it is (you guessed it) merely generating text that it thinks will make you happy in response to your query. LLMs are not generally optimized to say “I don’t know” when they don’t have enough information. Instead, they will give you an answer, expressing confidence.

Ethan Mollick, from Co-Intelligence: Living and Working with AI
