After OpenAI cofounder Greg Brockman's 2023 TED Talk, the head of TED, Chris Anderson, pressed him on the potential risks of training artificial intelligence with insufficient feedback ...

Anderson: Why isn't there just a huge risk of something truly terrible emerging?

Brockman: Well, I think all of these are questions of degree and scale and timing. And I think one thing people miss, too, is sort of the integration with the world is also this incredibly emergent, sort of, very powerful thing too. And so that's one of the reasons that we think it's so important to deploy incrementally. And so I think that what we kind of see right now, if you look at this talk, a lot of what I focus on is providing really high-quality feedback. Today, the tasks that we do, you can inspect them, right? It's very easy to look at that math problem and be like, "No, no, no, machine, seven was the correct answer." But even summarizing a book, like, that's a hard thing to supervise. Like, how do you know if this book summary is any good? You have to read the whole book. No one wants to do that. (nervous laughter, awkward silence)

The exact way the post-AGI world will look is hard to predict — that world will likely be more different from today's world than today's is from the 1500s. We do not yet know how hard it will be to make sure AGIs act according to the values of their operators.

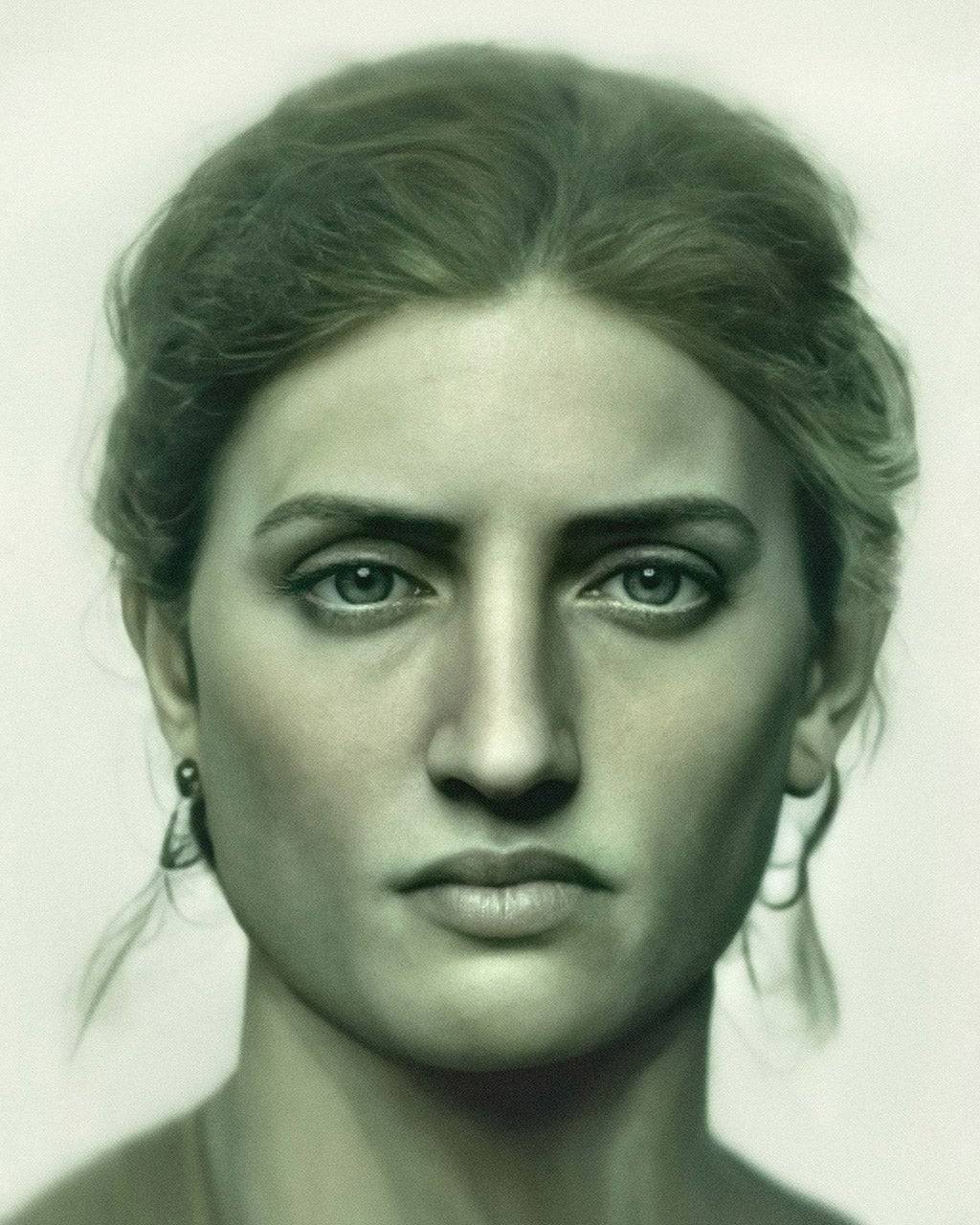

Greg Brockman, from his testimony delivered to the U.S. House of Representatives in June 2018
